For most of its history, AI has lived in a reversible world. Models could be retrained. Outputs could be corrected. Failures could be patched. Even serious mistakes (biased decisions, flawed predictions, hallucinations) remained largely informational. They could be reviewed, debated, and often undone.

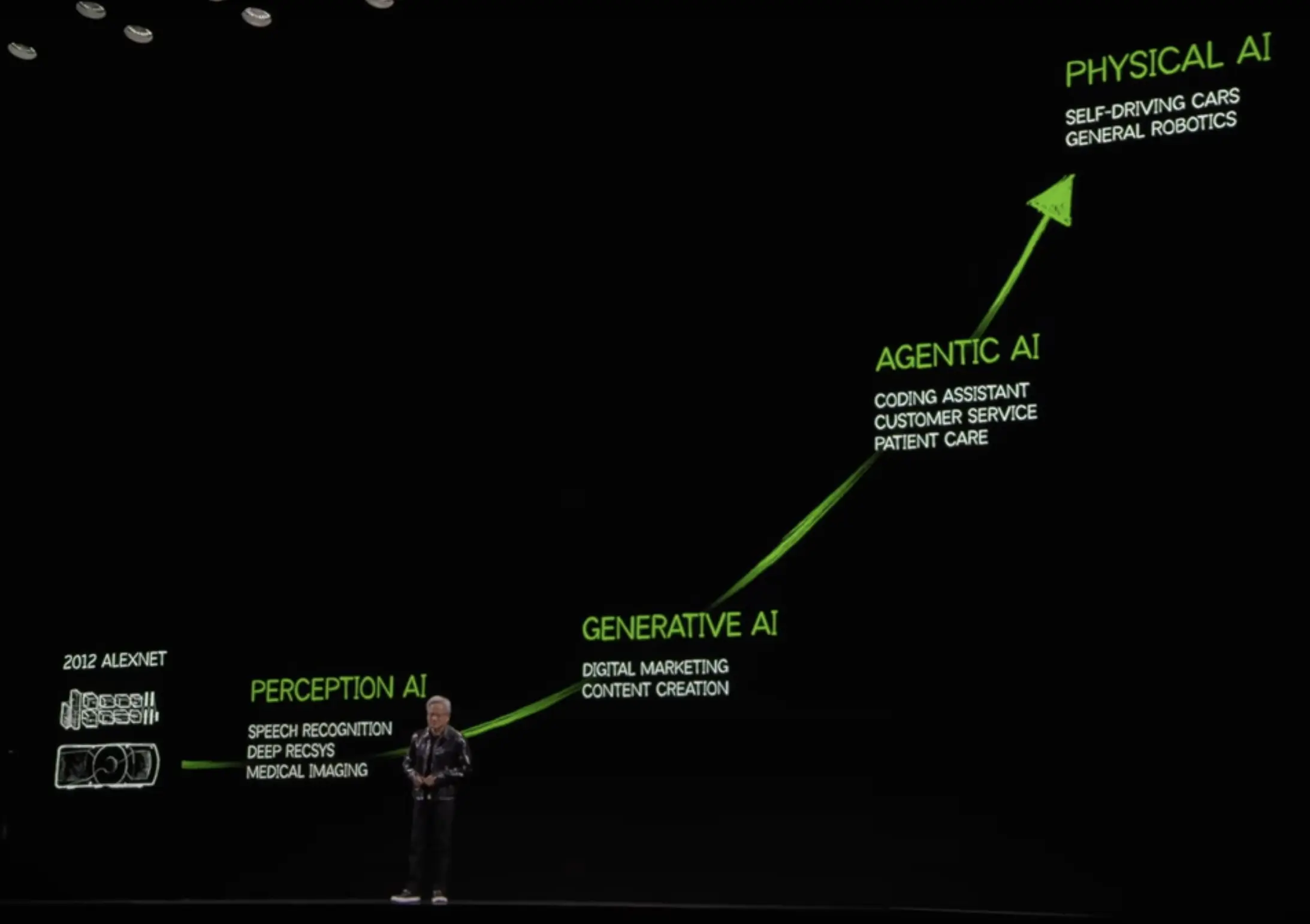

Physical AI breaks that assumption. When intelligence leaves the screen and enters the real world through robots, autonomous vehicles, drones, medical systems, weapons, or industrial machines, many actions become irreversible. When a robotic arm strikes a worker, a self-driving car misjudges a pedestrian, or a surgical robot reacts too late, the consequences cannot be undone. Once intelligence gains a body, time, physics, and consequence reclaim authority.

Physical AI operates under extreme uncertainty. Sensors fail, environments shift, and humans act unpredictably. Yet decisions must be made in milliseconds. A self-driving car cannot stop to debate ethics. A surgical robot cannot wait while tissue deteriorates. Here, intelligence is measured not by correctness alone but by judgment under hard constraints.

Alignment becomes far more complex. In software AI, misalignment usually shows up as wrong outputs or undesirable behaviors that can be corrected. In physical AI, misalignment propagates through matter. A single poorly specified objective, such as optimizing speed over safety or efficiency over robustness, can cause irreversible harm. Governance cannot remain external. Ethics must be embedded in architecture, control loops, materials, and fail-safe mechanisms. Design choices themselves become moral decisions.

Embodiment reshapes intelligence. Physical agents learn not only from data, but through interaction and environmental constraints. Their bodies shape what they can perceive and how they act. A humanoid robot, a warehouse manipulator, and an underwater drone may share algorithms, yet their intelligence behaves differently because their forms enforce different realities. Intelligence becomes situated, contextual, and inseparable from form.

At scale, physical AI systems will manage infrastructure, navigate among humans, and act autonomously in shared spaces. Small errors can cascade into systemic failures. The familiar AI debates about bias, misinformation, and hallucinations are no longer enough. Physical AI forces new questions. How do we design systems that fail safely? Which decisions must remain “human by design,” not merely by policy? How do we encode restraint, not just capability?

Bottom Line

When intelligence meets irreversibility, there is no rewind button. Physical AI acts in the real world, and its mistakes can leave permanent consequences. With high stakes and little margin for error, ethical design, foresight, and oversight are more critical than ever.